Building a GitHub Chat Interface with LangChain, LM Studio, and FAISS

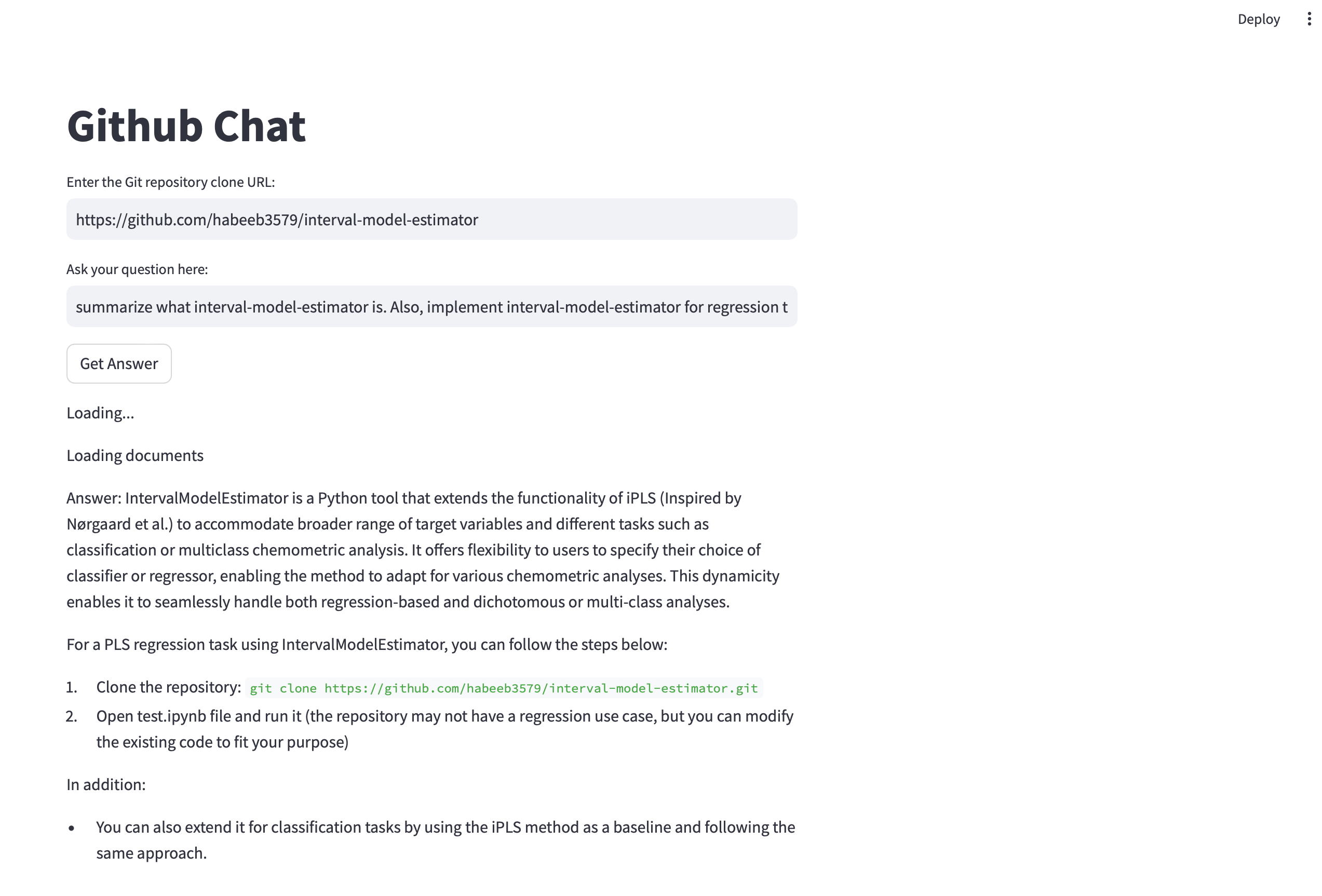

In this tutorial, we’ll use LangChain, LM Studio, and FAISS to build a chat interface for asking questions about GitHub repositories. The project involves four steps: cloning a GitHub repo with LangChain’s GitLoader, splitting the repository’s files into text chunks with a recursive text splitter, building a FAISS vector database from those chunks, and routing user queries to a local LM Studio instance that answers questions about the repository. Finally, we’ll wrap everything in a Streamlit app where users can supply a GitHub repo and ask questions about it, and deploy the project with Docker.

Cloning a GitHub Repo with LangChain’s GitLoader

We’ll use LangChain’s GitLoader to clone the desired GitHub repository. This gives us programmatic access to the repository’s contents. You may need to authenticate with GitHub (refer to the GitPython documentation).
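A minimal sketch of the cloning step, assuming the `langchain-community` and `GitPython` packages are installed. The names `local_path_for` and `load_repo` and the `./repos` folder are hypothetical, chosen only for illustration; `repo_path`, `clone_url`, and `branch` are GitLoader’s own parameters.

```python
from pathlib import Path


def local_path_for(clone_url: str, base_dir: str = "./repos") -> str:
    """Derive a local folder for the clone from the repo URL (hypothetical helper)."""
    name = clone_url.rstrip("/").split("/")[-1]
    if name.endswith(".git"):
        name = name[: -len(".git")]
    return str(Path(base_dir) / name)


def load_repo(clone_url: str, branch: str = "main"):
    """Clone the repo if it is not on disk yet and return its files as Documents."""
    # Imported lazily so the helper above works without LangChain installed.
    from langchain_community.document_loaders import GitLoader

    loader = GitLoader(
        repo_path=local_path_for(clone_url),  # where the clone is saved locally
        clone_url=clone_url,                  # remote repository to clone
        branch=branch,                        # branch to check out
    )
    return loader.load()
```

Calling `load_repo("https://github.com/<owner>/<repo>")` would return one Document per readable file, with the file path stored in each Document’s metadata. The default branch name `main` is an assumption; some repositories still use `master`.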

Connecting User Queries to LM Studio

Users interact with the chat interface by asking questions about the GitHub repository. Each query is sent to a local instance of LM Studio (defined as llm), a desktop application that runs large language models locally and serves them over an OpenAI-compatible API. The model answers the user’s question using the relevant chunks retrieved from the vector database.
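One way to define `llm` is sketched below, assuming the `langchain-openai` package is installed and LM Studio’s local server is running at its default address, `http://localhost:1234/v1`. The model name `local-model`, the API key string, and the `build_prompt` helper are placeholders for illustration, not part of the original project.

```python
def make_llm(model: str = "local-model", base_url: str = "http://localhost:1234/v1"):
    """Create a LangChain chat model that talks to LM Studio's local server."""
    # Imported lazily so build_prompt can be used without LangChain installed.
    from langchain_openai import ChatOpenAI

    return ChatOpenAI(
        base_url=base_url,    # LM Studio's OpenAI-compatible endpoint (assumed default)
        api_key="lm-studio",  # placeholder; the client only needs a non-empty string
        model=model,          # placeholder name for the model loaded in LM Studio
        temperature=0,
    )


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine retrieved repository excerpts and the user's question into one prompt."""
    context = "\n\n---\n\n".join(context_chunks)
    return (
        "Answer the question using only the repository excerpts below. "
        "If the answer is not in the excerpts, say so.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

With the server running, `llm = make_llm()` followed by `llm.invoke(build_prompt(question, chunks)).content` would return the model’s answer as a string.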

Note: in the GitLoader call, repo_path is the local path where the cloned repo is saved, and branch is the branch of the repo to clone.

Splitting Repo into Text Chunks

Next, we’ll use a recursive text splitter to divide the repository’s contents into manageable text chunks. This step is crucial for processing and analyzing large volumes of text efficiently.

Note that consecutive chunks overlap by 20 characters, so text near a chunk boundary keeps some of its surrounding context.
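The sketch below illustrates the idea in two parts. `sliding_chunks` is a toy fixed-size splitter (not the recursive algorithm) that only shows what an overlap means; `split_documents` wraps LangChain’s `RecursiveCharacterTextSplitter`, assuming the `langchain-text-splitters` package is installed. The chunk size of 500 is an assumption, since only the overlap of 20 is stated above.

```python
def sliding_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Toy splitter: each chunk repeats the last `chunk_overlap` characters of the previous one."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - chunk_overlap          # how far each new chunk advances
    stop = max(len(text) - chunk_overlap, 1)   # avoid a trailing chunk that is pure overlap
    return [text[i:i + chunk_size] for i in range(0, stop, step)]


def split_documents(docs, chunk_size: int = 500, chunk_overlap: int = 20):
    """Split LangChain Documents with the recursive splitter (chunk_size is an assumed value)."""
    # Imported lazily so the toy function above runs without LangChain installed.
    from langchain_text_splitters import RecursiveCharacterTextSplitter

    splitter = RecursiveCharacterTextSplitter(
        chunk_size=chunk_size,
        chunk_overlap=chunk_overlap,
    )
    return splitter.split_documents(docs)
```

The recursive splitter differs from the toy version in that it first tries to break on natural separators such as blank lines and newlines, and only falls back to smaller separators when a piece is still larger than `chunk_size`.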

Creating Vector Database with FAISS

Once we have the text chunks, we’ll embed them and build a vector database with FAISS, which lets us retrieve the chunks most similar to a user’s question.
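A minimal sketch of indexing and retrieval, assuming the `langchain-community` and `faiss-cpu` packages are installed. The embedding model is left as a parameter because it is not specified here; any LangChain embeddings object should work. The function names and `k=4` are illustrative choices.

```python
def build_index(chunks, embeddings):
    """Embed the chunks and store the vectors in an in-memory FAISS index."""
    # Imported lazily so the helpers below work without FAISS installed.
    from langchain_community.vectorstores import FAISS

    return FAISS.from_documents(chunks, embeddings)


def retrieve(db, question: str, k: int = 4):
    """Return the k chunks whose embeddings are closest to the question's embedding."""
    return db.similarity_search(question, k=k)


def format_context(docs) -> str:
    """Join retrieved chunks into one string, labelling each with its source file."""
    return "\n\n".join(
        f"[{doc.metadata.get('source', 'unknown')}]\n{doc.page_content}"
        for doc in docs
    )
```

The string returned by `format_context(retrieve(db, question))` is what would be passed to the language model as context alongside the user’s question.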

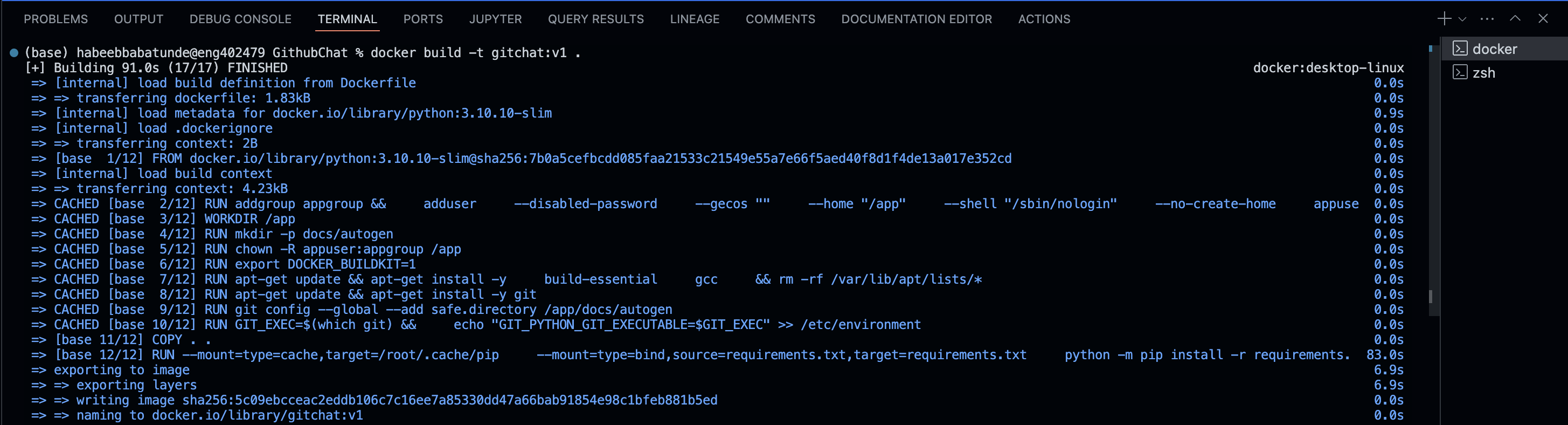

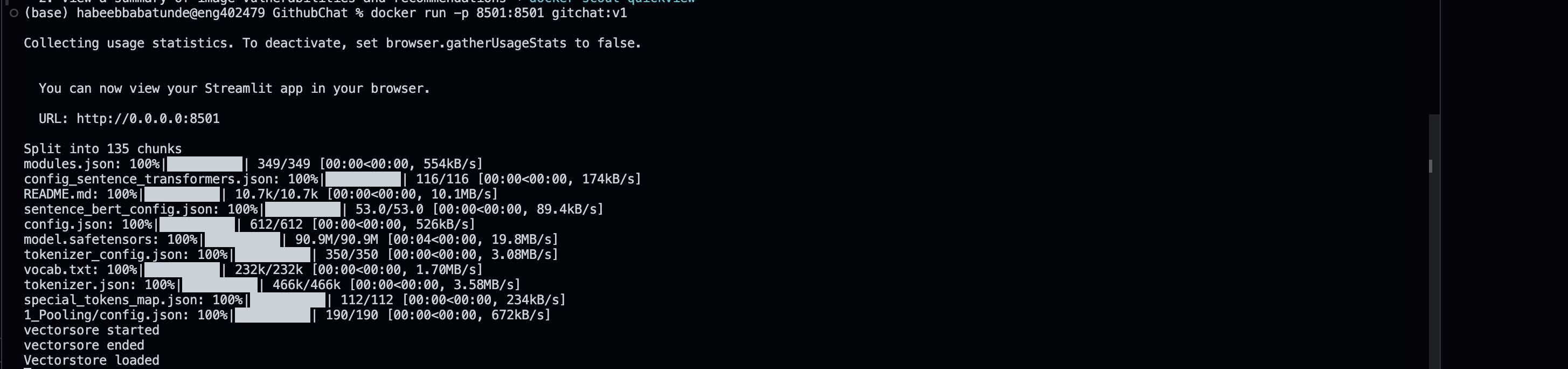

Building Streamlit App and Docker Deployment

Finally, we’ll build a user-friendly Streamlit app where users can enter a GitHub repository and their questions. The app communicates with the backend components (LangChain, FAISS, and LM Studio) to answer each question.
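A rough sketch of what such an app could look like, assuming the `streamlit` package is installed. `is_github_url` is a hypothetical validation helper, and `answer_fn` stands in for whatever function ties together cloning, splitting, retrieval, and the LM Studio call; neither name comes from the original project.

```python
from urllib.parse import urlparse


def is_github_url(url: str) -> bool:
    """Accept only https://github.com/<owner>/<repo> style URLs (hypothetical check)."""
    parsed = urlparse(url.strip())
    parts = [p for p in parsed.path.split("/") if p]
    return parsed.scheme == "https" and parsed.netloc == "github.com" and len(parts) >= 2


def main(answer_fn):
    """Render the page; answer_fn(repo_url, question) -> str is supplied by the caller."""
    # Imported lazily so the URL check can be used and tested without Streamlit.
    import streamlit as st

    st.title("Chat with a GitHub repository")
    repo_url = st.text_input("GitHub repository URL")
    question = st.text_input("Question about the repository")

    if st.button("Ask"):
        if not is_github_url(repo_url):
            st.error("Please enter a URL of the form https://github.com/<owner>/<repo>.")
        elif not question.strip():
            st.warning("Please enter a question.")
        else:
            with st.spinner("Thinking..."):
                st.write(answer_fn(repo_url, question))
```

Saved as `app.py` with a final line that calls `main(...)` with your own pipeline function, this would be started with `streamlit run app.py`.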

We’ll containerize the entire project using Docker for easy deployment and scalability.

Once the Docker container is up and running, the app can be accessed on port 8501 (for example, at http://localhost:8501 if that port is published to the host).

The full implementation of the project’s components will be uploaded to my GitHub page!